Is AI Intelligent? Wrong Question.

A thermostat maintains a temperature.

A bacterium swims toward nutrients.

A human plans a career.

None of these are intelligent in the same way - but none of them is simply unintelligent, either.

The mistake is asking where intelligence begins.

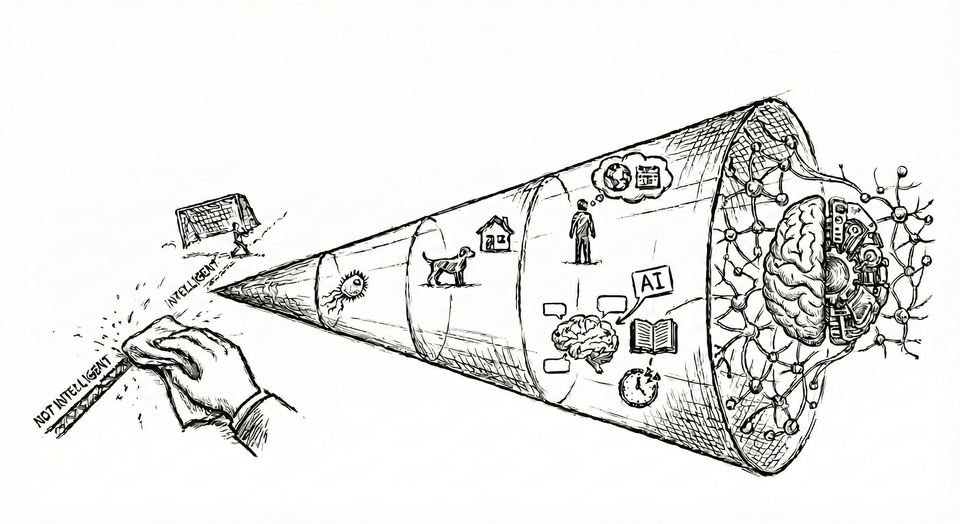

"Is today's AI intelligent? When will AI become intelligent?"

Both questions assume a line to cross - a moment when something flips from not-intelligent to intelligent.

Every time we draw that line, it moves. Not because the systems changed, but because the line was always arbitrary.

The Moving Goalposts

Chess was supposed to be the marker. Machines would never master chess. It required intuition, strategy, the ineffable human ability to think ahead. Then Deep Blue beat world champion Garry Kasparov in 1997 - and overnight, chess became "just calculation".

Image recognition followed the same path. Useful, but machines do not really see.

Conversation? Impressive, but not real understanding.

Code generation, scientific reasoning, creative writing - each advance arrives with a familiar qualifier: not truly intelligent, not really understanding.

This is the pattern: when machines succeed, we quietly change the definition of intelligence.

We have forced continuums into binaries before - nature vs. nurture, conscious vs. not conscious, natural vs. artificial.

Now: intelligent vs. not intelligent.

What Actually Varies

A large language model can explain quantum field theory and write legal briefs. But ask it to count the r's in "strawberry" and it stumbles.

Binary thinking forces us either to dismiss it ("just autocomplete") or to inflate it ("basically human"). Both miss the point: this is a kind of competence that fits neither box.

William James captured the essence more than a century ago: intelligence is the ability to reach the same goal by different means. If something has a goal, encounters an obstacle, and tries to find a way around it - we are looking at some kind of intelligence.

This is not a definition. It is a detector - a minimum signal that intelligence is present. It ignores origin and labels. Natural or artificial. Evolved or engineered. What matters is behavior.

A thermostat has a goal and responds to obstacles, but its response is fixed. Minimal intelligence, if any.

A rat in a maze tries, fails, remembers, adjusts. More interesting - clear flexibility, something like search.

A crow that finds food at the bottom of a tube, out of reach, then bends a wire into a hook to retrieve it - unambiguously intelligent. A novel solution to a novel problem.

A human who questions whether the goal itself makes sense - that is a different kind of intelligence. Not just solving problems, but choosing which problems to solve.

...

What makes these different? Why is the crow's intelligence a different kind from the rat's?

That question is hard to answer - not because we lack a definition of intelligence, but because we lack a framework to compare different kinds of intelligence.

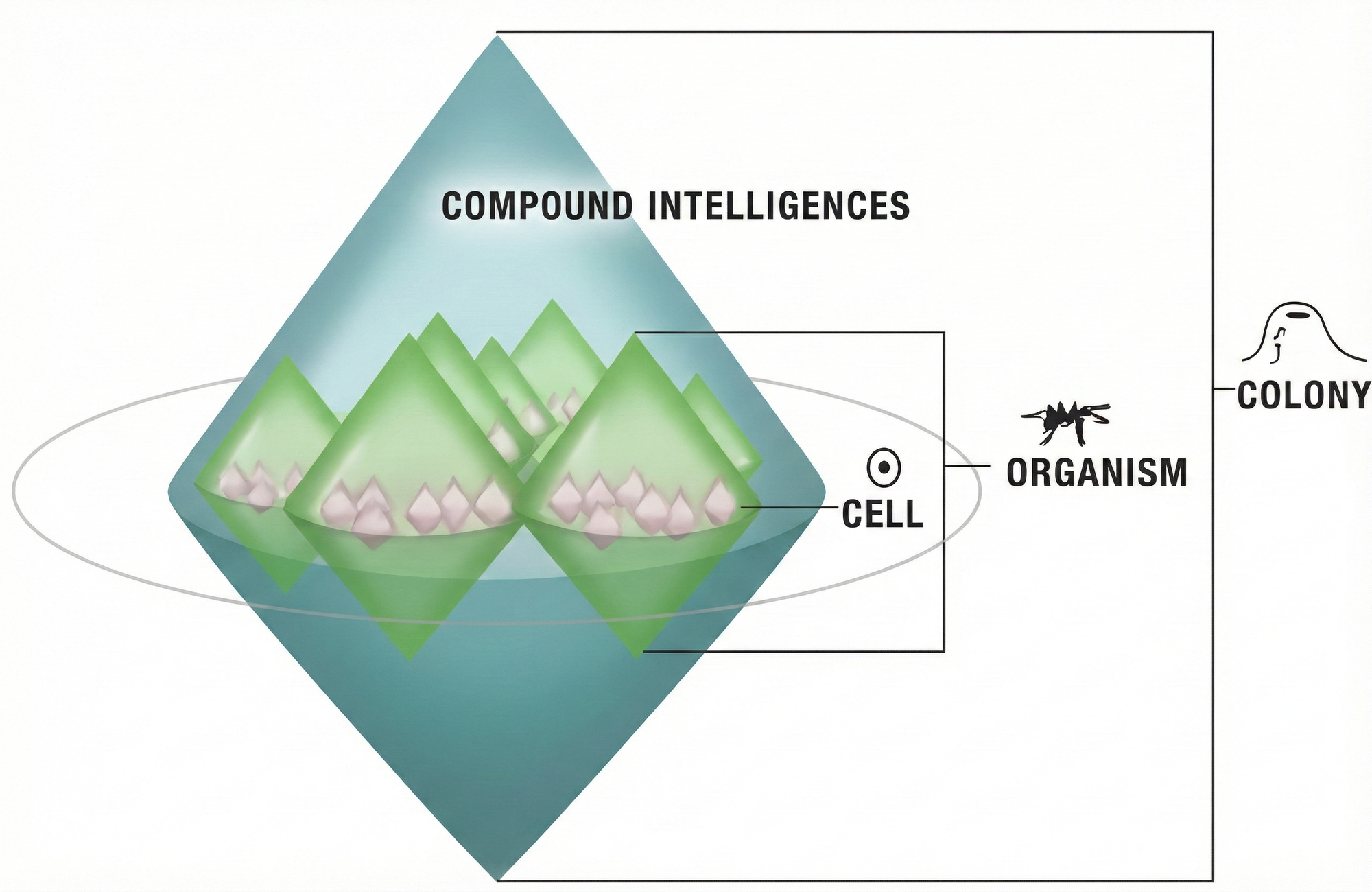

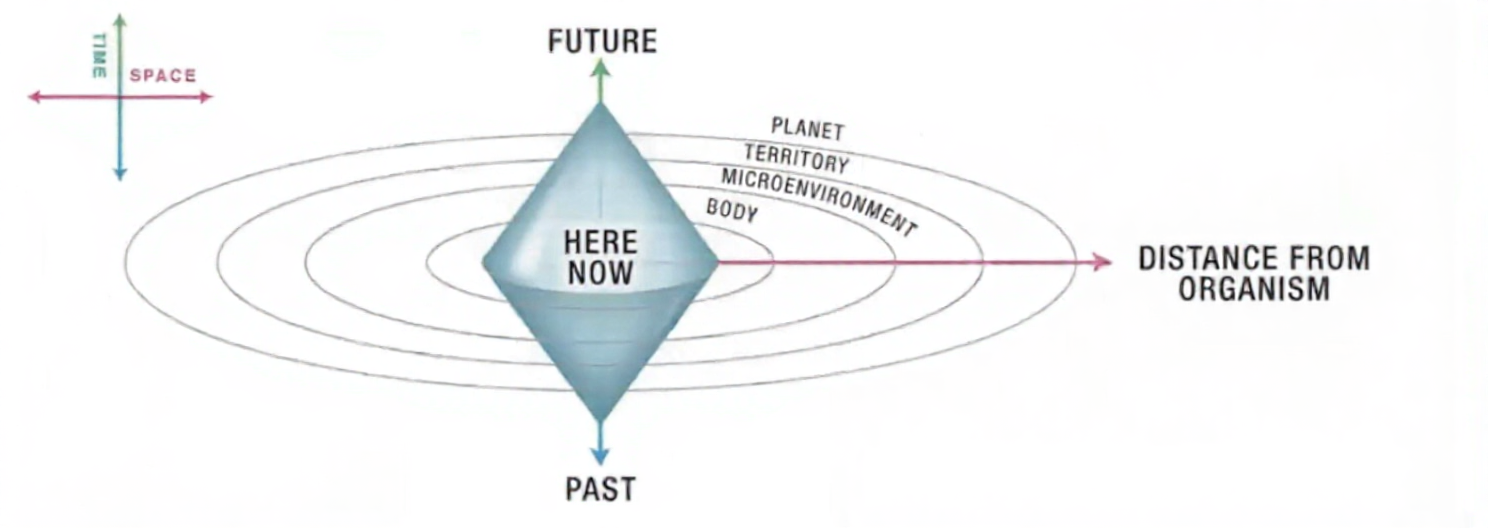

The Cognitive Light Cone

Behavior can tell us if something is intelligent. That much is clear.

But what varies between different kinds of intelligence is not "smartness" - it is scope: what goals a system can even conceive and pursue.

Michael Levin calls this the cognitive light cone.

It lets us compare any intelligence, biological or artificial, regardless of substrate or origin. The measure is not physical reach (how far you can sense or move) but the boundaries of possible goals: how far in space and time the goals a system can pursue extend.

To make this concrete, consider a few examples:

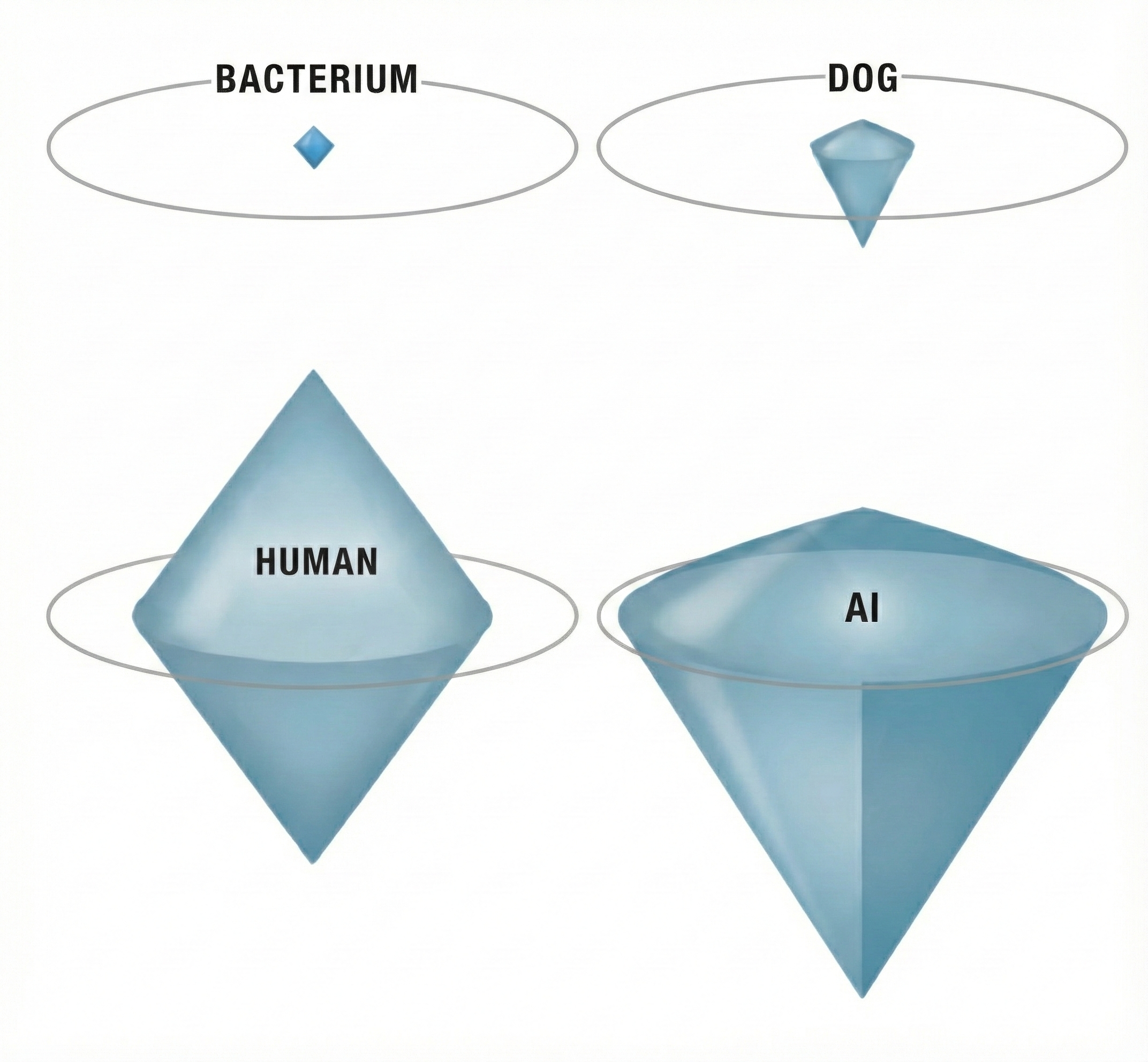

A bacterium pursues simple, immediate goals: swim toward nutrients, away from toxins. Its cognitive light cone spans micrometers and seconds. It has no memory beyond the last few moments, cannot plan a route, and cannot conceive of anything beyond its immediate vicinity. Everything outside that tiny sphere is literally inconceivable.

A dog pursues richer goals. Protect territory. Get the next meal. Remember yesterday's good spot and return to it. These goals span its house, its yard, its daily routines. But it cannot plan months ahead - store food for winter, return to this spot next spring. That future is beyond what it can conceive.

A human pursues goals dogs cannot conceive. Plan careers across decades. Write for people we will never meet. Build for grandchildren not yet born. We extend through time using symbols, plans, institutions. But our light cone has edges too. We cannot track an entire continent simultaneously. We cannot hold a thousand moving parts in mind at once. Try planning for your grandchildren with the same detail you plan your week - the future dissolves into vague hopes. Try operating at millisecond timescales - your neurons cannot keep up. Broader than the dog's cone, but bounded.

A large language model has a cognitive light cone unlike that of any biological intelligence. It can synthesize research across fields, connect ideas across centuries, and explain systems no single human could master. Its reach through documented knowledge is vast. It can reason about multi-year strategies, discuss long-term problems, plan across any timescale. But it cannot pursue those goals beyond the immediate response - it cannot monitor what happens, adjust, or maintain direction over time. It can model vast futures but cannot act toward them autonomously.

...

This is what we gain by abandoning the binary. Not "Is it intelligent?" but "What goals can it pursue? What futures can it model? Where are its boundaries?"

A bacterium and an LLM are both intelligent and both limited - in completely different dimensions. Asking which is "more intelligent" is like asking whether wide or tall is "more".

Combination, Not Improvement

Can cognitive light cones expand?

Yes - and one powerful mechanism is combination, not individual improvement.