Why AI Works Until It Doesn't

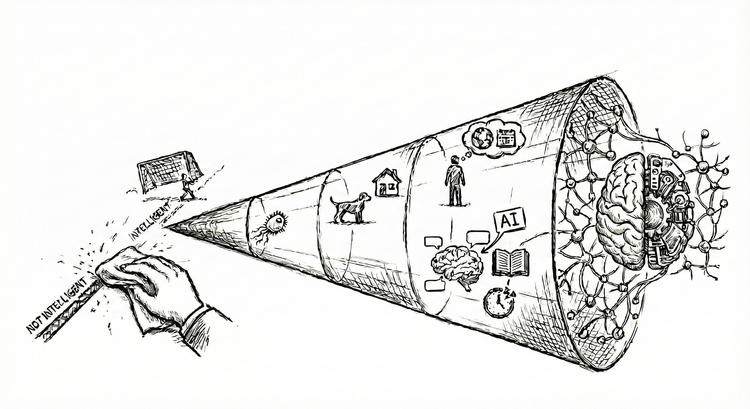

In our article "Is AI Intelligent? Wrong Question.", we explored how intelligence is not binary but dimensional. A bacterium, a dog, a human, AI - all are intelligent, yet differ radically in the goals they can conceive and pursue.

But understanding intelligence as dimensional does not tell us how it actually operates. And how it operates determines something crucial: whether it can remain intelligent as conditions change.

Modern AI demonstrates extraordinary capability - until conditions drift and performance degrades. Then it waits for humans to notice and intervene. Powerful, yes. But something is missing.

What is missing points to something fundamental about intelligence itself.

The Pattern

Watch a two-year-old in a playground. They do not head straight for the slide and optimize their way down. They try climbing up the slide backward. They drop objects from different heights to see what happens. They test, explore, experiment - building a model of how the world works through relentless curiosity.

Now watch a chef during dinner service. No experimentation. Every movement precise, every technique proven. Sear, flip, plate - muscle memory executing hundreds of refined decisions. The goal is clear: deliver perfect dishes efficiently. This is mastery - exploiting accumulated knowledge to achieve outcomes reliably and fast.

Finally, watch a grandmother with a curious toddler. She is not pursuing her own goals. She is shaping the environment - preventing harm, removing hidden dangers, answering endless "why" questions - so exploration can happen safely. Walking along a quiet street, she lets the child wander, pause, examine - until the signal at the crossing ahead turns red. She takes the toddler's hand, and they wait, then cross. The environment changed, and exploration shifted to execution.

Three distinct behaviors. Three modes of intelligence.

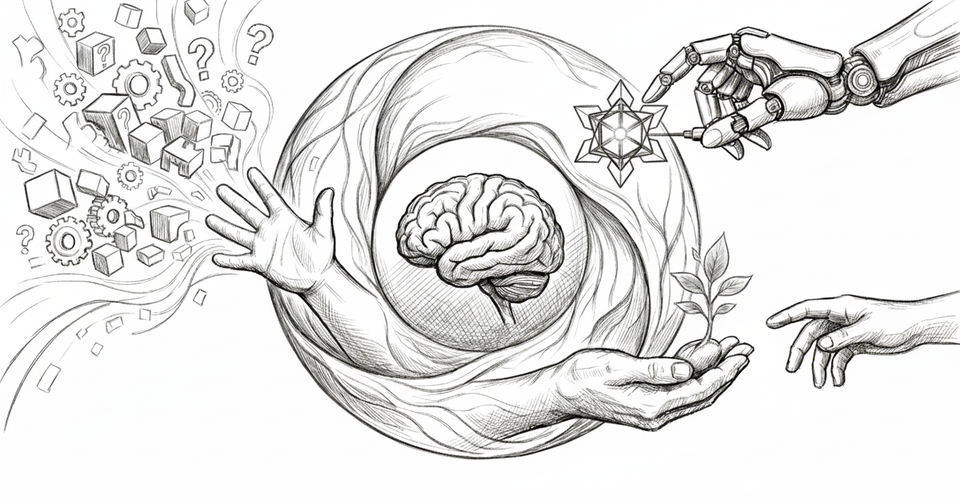

Psychologist Alison Gopnik describes them as fundamental: the child's curiosity that explores, the adult's focus that exploits, and the caregiver's role that empowers. Together, they reveal intelligence not as a single capability, but as a cycle - a continuous exchange between discovery, action, and guidance.

Why does intelligence organize itself this way? Because learning, performance, and continuity pull in opposing directions. No single optimization can serve all purposes at once. Intelligence must shift between them.

The Cycle

Exploration is intelligence operating before it knows what matters. Its objective: understand, not succeed. Seek information, causal structure, latent possibilities. The child climbing up the slide is not optimizing for speed or safety. They are expanding the space of future action, building world models that show how actions lead to outcomes.

But exploration is expensive. It consumes time, delays reward, introduces uncertainty. Which is why intelligent systems cannot remain in exploration indefinitely. At some point, learning must give way to doing.

Exploitation is intelligence operating once the world is sufficiently understood. It narrows focus, reduces variance, and applies accumulated knowledge with speed and precision. The chef in dinner service does not experiment. The surgeon does not improvise mid-procedure. They execute what is known to work.

This is the mode we most readily recognize as competence. Exploitation thrives under stability - when environments are predictable and goals well-defined, it delivers extraordinary value. But it carries a hidden assumption: that the world remains sufficiently unchanged. When conditions shift, it keeps applying yesterday's solutions to today's problems, not through faulty execution, but because it has no mechanism to notice the change.

Empowerment operates beyond immediate tasks. It preserves and shapes conditions for sustained adaptation. The grandmother does not explore or exploit. She transfers knowledge - answering questions, demonstrating, teaching - while governing when exploration must pause and execution must begin. She creates the conditions in which both modes can happen effectively: safe exploration here, timely execution there. As environments change, she reshapes these constraints in response.

This creates the cycle: explore until understanding is sufficient, exploit until it becomes insufficient, return to exploration. The loop that sustains intelligence.
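
To make the loop concrete, here is a minimal sketch in Python. It is a toy illustration, not a description of how any real AI system works. The names (`run_cycle`, `drifting_rewards`) and the parameters (`explore_rounds`, `window`, `tolerance`) are all assumptions invented for this example. "Sufficient understanding" is crudely modeled as a fixed number of trials per option, and "insufficient" as recent results falling well below what exploration led the agent to expect.

```python
from collections import deque


def drifting_rewards(t, arm):
    """Hypothetical world: option 0 is best until step 20, then it degrades."""
    if t < 20:
        return 1.0 if arm == 0 else 0.5
    return 0.1 if arm == 0 else 0.5


def run_cycle(reward_fn, n_arms, steps, explore_rounds=3, window=5, tolerance=0.2):
    """Alternate between exploring and exploiting; return (mode, arm) for each step."""
    history = []
    mode = "explore"
    estimates = [0.0] * n_arms
    samples = []                   # (arm, reward) pairs from the current exploration phase
    recent = deque(maxlen=window)  # rewards seen most recently while exploiting
    best = 0

    for t in range(steps):
        if mode == "explore":
            # Try every option in turn: the goal is to understand, not to succeed.
            arm = len(samples) % n_arms
            samples.append((arm, reward_fn(t, arm)))
            history.append((mode, arm))
            if len(samples) == explore_rounds * n_arms:
                # Understanding is treated as sufficient: average the evidence and commit.
                for a in range(n_arms):
                    rewards = [r for (x, r) in samples if x == a]
                    estimates[a] = sum(rewards) / len(rewards)
                best = max(range(n_arms), key=lambda a: estimates[a])
                samples = []
                recent.clear()
                mode = "exploit"
        else:
            # Execute what is known to work, while watching for drift.
            recent.append(reward_fn(t, best))
            history.append((mode, best))
            drifted = (
                len(recent) == window
                and sum(recent) / window < estimates[best] - tolerance
            )
            if drifted:
                # The world no longer matches the model: return to exploration.
                mode = "explore"

    return history


history = run_cycle(drifting_rewards, n_arms=2, steps=40)
print(history[6], history[22], history[-1])
```

In this toy run the agent commits to option 0, notices the drop shortly after step 20, re-explores, and settles on option 1. Remove the drift check and it would keep choosing option 0 indefinitely, which is the failure described above: yesterday's solution applied to today's problem. The thresholds that decide when to switch are set by hand here, from outside the loop.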